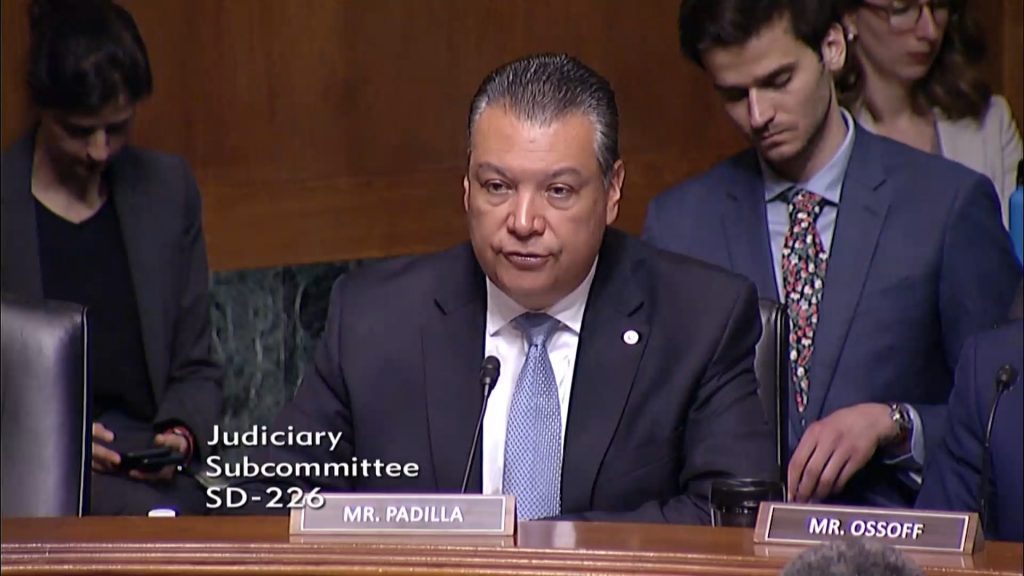

Padilla Raises Inclusivity Concerns at Committee Hearing on Artificial Intelligence

WASHINGTON, D.C. — Today, U.S. Senator Alex Padilla (D-Calif.) joined a hearing of the Senate Judiciary Committee titled “Oversight of A.I.: Rules for Artificial Intelligence” to question witnesses on concerns surrounding inclusivity in the rapidly developing Artificial Intelligence industry. During the hearing, Padilla questioned Samuel Altman, CEO of OpenAI, and Christina Montgomery, Chief Privacy & Trust Officer at IBM. Padilla today also questioned witnesses about Artificial Intelligence at a Homeland Security and Governmental Affairs Committee Hearing titled “Artificial Intelligence in Government.”

Padilla began his remarks by reiterating that AI is not a new technology and emphasizing the importance of exploring its potential benefits while also addressing the potential harms and biases that stem from its development and use. He asked Mr. Altman and Ms. Montgomery to explain how they are ensuring that language and cultural inclusivity is a focus of their AI language models, expressing concern that AI will repeat social media’s failure to ensure equitable treatment of diverse demographic groups.

Ms. Montgomery spoke to her company’s work to prevent bias. Mr. Altman committed to working with government to make access to AI inclusive, especially for groups historically underrepresented in technology.

Padilla then raised concerns about the concentration of power in building the largest models and about the need for greater workforce diversity and inclusion in the broader AI ecosystem, so that new innovations are built for the benefit of all of society. Padilla asked Ms. Montgomery which applications of AI, in addition to generative AI, the public and policymakers should keep in mind as they consider possible regulations. She pointed to the potential for generative AI to create misleading or deceptive content and said AI should be regulated where it touches people and society.

Key Excerpts:

- PADILLA: If you never thought about AI until the recent emergence of generative AI tools, the developments in this space may feel like they’ve just happened all of a sudden. But the fact of the matter, Mr. Chair, is that they haven’t. AI is not new, not for government, not for business, not for public use, not for the public. […] I’m, frankly, excited to explore how we can facilitate positive AI innovation that benefits society, while addressing some of the already known harms and biases that stem from the development and use of the tools today.

- PADILLA: Now, with language models becoming increasingly ubiquitous, I want to make sure that there’s a focus on ensuring equitable treatment of diverse demographic groups. My understanding is that most research [in] evaluating and mitigating fairness harms has been concentrated on the English language, while non-English languages have received comparatively little attention or investment […] I’m deeply concerned about repeating social media’s failure in AI tools and applications. Question: Mr. Altman and Ms. Montgomery, how are OpenAI and IBM ensuring language and cultural inclusivity [in] their large language models? And is this even an area of focus in the development of your products?

- MONTGOMERY: Bias and equity in technology is a focus of ours and always has been. I think diversity in terms of the development of the tools, in terms of their deployment. So having diverse people that are actually training those tools, considering the downstream effects, as well. […] We don’t have a consumer platform, but we are very actively involved with ensuring that the technology we help to deploy in the large language models that we use in helping our clients to deploy technology is focused on, and available in, many languages.

- ALTMAN: We think this is really important. One example is that we worked with the government of Iceland, which is a language with fewer speakers than many of the languages that are well represented on the internet, to ensure that their language was included in our model. And we’ve had many similar conversations and I look forward to many similar partnerships, with lower resourced languages, to get them into our models. GPT-4 is, unlike previous models of ours which were good at English and not very good at other languages, now pretty good at a large number of languages. You can go pretty far down the list, ranked by number of speakers, and still get good performance. But for these very small languages, we’re excited about custom partnerships to include that language into our model run.

- PADILLA: I don’t think we should lose sight of the broader AI ecosystem as we consider AI’s broader impact on society, as well as the design of appropriate safeguards. Ms. Montgomery, in your testimony, as you noted, AI is not new. Can you highlight some of the different applications that the public and policymakers should also keep in mind as we consider possible regulations?

- MONTGOMERY: I think the generative AI systems that are available today are creating new issues that need to be studied, new issues around the potential to generate content that could be extremely misleading, deceptive, and the like. So those issues absolutely need to be studied. But we shouldn’t also ignore the fact that AI is a tool. It’s been around for a long time. It has capabilities beyond just generative capabilities. And again, that’s why I think going back to this approach, where we’re regulating AI where it’s touching people and society, is a really important way to address it.

More information about the hearing is available here.

###